The project consists of two main components: the AI model that generates the audio, and the arm-mounted camera, which interacts with the audience by tracking and following their faces. The arm is inspired by any number of examples in science fiction (such as the Ralph McQuarrie example from Star Wars here). There are several intentions behind using the arm to interact with the audience. The first is to give the project a presence. I want it to provide a physical embodiment for the sound-generating AI, in the hope that this will give greater meaning to the audio being generated. Seeing the camera follow their face, the audience would also be aware that they were being ‘watched’, something that would not be obvious with a static camera and that some potential audience members may find objectionable. Finally, from a purely technical point of view, moving the camera to track the audience's face means the image passed through to the sound-generating AI can be ideally framed.

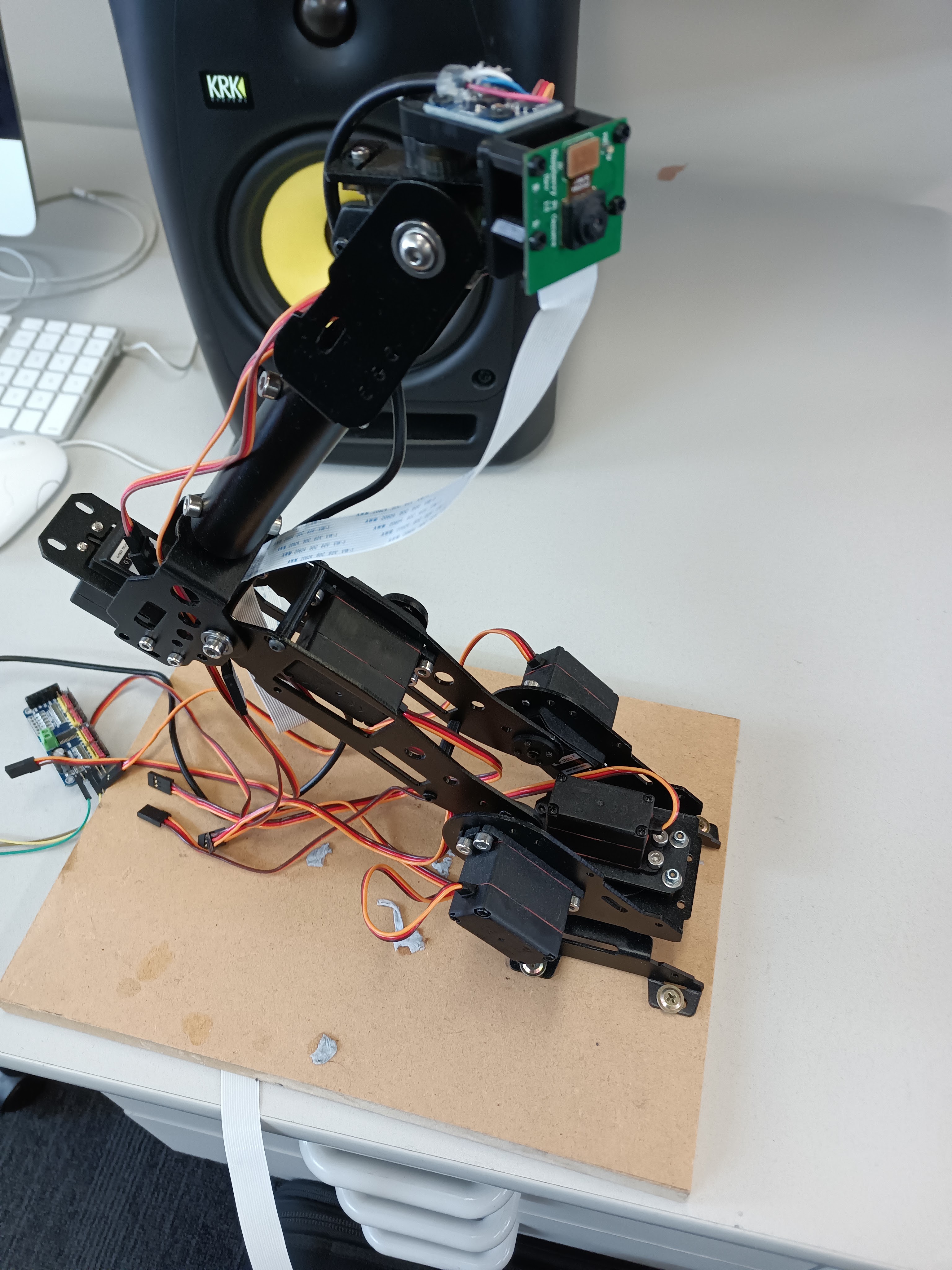

As an initial proof of concept I threw together a cheap servo-based kit. Running OpenCV on a Raspberry Pi (with an additional boost from a Coral TPU), I was able to get it tracking faces quite reliably. Unfortunately an arm of this size (roughly 300mm tall) was never going to satisfy what I wanted from the presence of the piece. To succeed in adding weight to the audio, I felt the arm would need to meet the audience at eye level; I didn’t want the audience to be looking down on it. Scaling the arm up that significantly presents something of a challenge. While there are robot arms available that are large enough, they are intended for heavy industrial use and capable of lifting considerable weight, and as such are far too heavy and expensive for my use. I also wanted to avoid the aesthetics of an industrial piece of equipment. This led me to design and build my own arm.
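The tracking logic for a small pan/tilt rig like this can be sketched roughly as below. This is an illustration only, not the code that ran on the prototype: the names `PanTilt` and `track_step`, the gain, the deadband, the 0 to 180 degree servo range and the sign conventions are all assumptions. It takes a face bounding box of the `(x, y, w, h)` form that a typical face detector returns and nudges the servos so the face drifts towards the centre of the frame.

```python
from dataclasses import dataclass


@dataclass
class PanTilt:
    pan_deg: float = 90.0   # assumed servo mid-point
    tilt_deg: float = 90.0  # assumed servo mid-point


def clamp(value, low, high):
    """Keep value inside the closed interval [low, high]."""
    return max(low, min(high, value))


def track_step(state, face_box, frame_size, gain_deg=10.0, deadband=0.05):
    """Return a new PanTilt nudged so the face moves towards the frame centre.

    face_box is (x, y, w, h) in pixels; frame_size is (width, height).
    """
    x, y, w, h = face_box
    frame_w, frame_h = frame_size

    # Offset of the face centre from the frame centre, normalised to -1..1.
    err_x = ((x + w / 2) - frame_w / 2) / (frame_w / 2)
    err_y = ((y + h / 2) - frame_h / 2) / (frame_h / 2)

    # Ignore tiny errors so the servos do not jitter around the target.
    if abs(err_x) < deadband:
        err_x = 0.0
    if abs(err_y) < deadband:
        err_y = 0.0

    # Proportional correction. The signs depend on how the servos are
    # mounted, so they are an assumption here.
    pan = clamp(state.pan_deg - gain_deg * err_x, 0.0, 180.0)
    tilt = clamp(state.tilt_deg + gain_deg * err_y, 0.0, 180.0)
    return PanTilt(pan, tilt)


if __name__ == "__main__":
    state = PanTilt()
    # A face to the right of centre in a 640x480 frame.
    state = track_step(state, (540, 190, 100, 100), (640, 480))
    print(state)
```

Calling `track_step` once per detected frame gives a simple proportional controller; a larger `gain_deg` follows faster but overshoots more easily.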

My hope was that because I am going to have a relatively light payload (compared to an industrial machine), I could build a tall enough arm without resorting to large, expensive motors and gearboxes, and keep the whole thing reasonably light. I thought the best approach would be to grab what motors I could afford and design and build the arm around them, so I purchased some of the brushless DC motors with harmonic gearboxes that were all over Aliexpress. Given how many of these motors were available at a comparatively low price, I had hoped to find examples of other people using them on similar projects, but I had no luck, leaving me to figure out how to control them myself. Using the software available on the website of the motors' OEM (www.yizhi.info) and the USB CAN bus adapter that came with the motors, I was able to test the motors and get some idea of the torque they were capable of. Lifting several kilograms on a 1 metre long lever arm seemed no problem, so I was reasonably confident they would work. Using a CAN bus sniffer while running the OEM software, I was able to figure out the various CAN bus commands needed to get control information to and from the motors. The next step will be to integrate that control into ROS; more on that later.
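To put a rough number on that lever arm test: the torque needed to hold a mass at the end of a horizontal arm is the mass times gravity times the arm length. The sketch below works this through for an illustrative 3 kg load, which is my stand-in for "several kilograms" rather than a measured figure; the function name is hypothetical and the weight of the arm itself is ignored.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def holding_torque_nm(mass_kg, arm_length_m, angle_from_horizontal_deg=0.0):
    """Static torque (N·m) needed at the joint to hold a point mass.

    The torque is largest with the arm horizontal and falls off with the
    cosine of the angle as the arm is raised towards vertical.
    """
    angle_rad = math.radians(angle_from_horizontal_deg)
    return mass_kg * G * arm_length_m * math.cos(angle_rad)


if __name__ == "__main__":
    # Illustrative load only: 3 kg at the end of a 1 m arm.
    torque = holding_torque_nm(3.0, 1.0)
    print(f"{torque:.1f} N·m")  # prints "29.4 N·m"
```

This is only the static holding torque. Accelerating the arm, and the arm's own weight, both add to what the joint at the base has to deliver.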

For the main structure of the arm I purchased some lengths of aluminium tube, which I am sure would work fine, but for aesthetics' sake and to save a little more weight I have also bought some sections of carbon fibre tube. I had initially hoped to 3D print the connecting parts for the arm. Although the printing time for such large parts was fairly long, it still made for quick prototyping compared to other methods. However, although the parts were strong enough, i.e. they didn’t break, they weren’t anywhere near stiff enough. The arm would shake and wobble when moving at any speed beyond a crawl, and even when printed in expensive filaments such as carbon-filled nylon, the printed parts were never going to be adequately rigid. The next step will be to redesign the parts to be cut from aluminium. To do this, I have just finished building a router capable of machining aluminium plate. I may also look at moulding the parts out of carbon fibre, but will wait until I have functioning aluminium parts first, as I don’t like the idea of having to make carbon parts more than once.

So I’m currently redesigning the arm in Fusion 360. This is not only to manufacture the required parts but also to use as a control model in ROS, the Robot Operating System. Something that became quite apparent when I saw the scale of the full-sized arm was that the movement kinematics I had bodged into the code for the small-scale prototype arm weren’t going to work at full size. A much more comprehensive means of control would be required to prevent the arm from damaging itself and the audience it is to interact with. Using ROS will allow the arm and its environment to be virtualized and will make things like the face tracking easier to implement. As is usually the case with software that is quite powerful, it can be difficult to get set up and running, but I aim to do it within the coming few months. At the moment development on the arm is on hold while I focus my attention on the sound-generating AI model.
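To give a flavour of the kinematics problem mentioned above, here is a minimal sketch of inverse kinematics for a planar two-link arm, with a reach check so that impossible targets are rejected rather than sent to the motors. It is a textbook simplification and not the model the real arm will use: the link lengths, the function names and the choice of which of the two elbow solutions to return are all assumptions, and it knows nothing about joint limits or obstacles.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Return (shoulder, elbow) angles in radians that place the tip of a
    planar two-link arm at (x, y), or None if the target is out of reach.

    Only one of the two possible elbow configurations is returned.
    """
    dist_sq = x * x + y * y
    # Law of cosines gives the elbow angle from the target distance.
    cos_elbow = (dist_sq - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        return None  # target is too far away or too close to the base
    elbow = math.acos(cos_elbow)
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def two_link_fk(shoulder, elbow, l1, l2):
    """Forward kinematics: tip position (x, y) for the given joint angles."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


if __name__ == "__main__":
    # Hypothetical link lengths in metres.
    angles = two_link_ik(0.8, 0.6, 0.7, 0.7)
    print(angles)
    print(two_link_fk(*angles, 0.7, 0.7))
    print(two_link_ik(2.0, 0.0, 0.7, 0.7))  # out of reach
```

Running the forward kinematics on the result is a cheap sanity check that the solver and the arm model agree before any command goes to the hardware.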